AI Malware System

FastAPI endpoint. This project examines and analyses malware statically and dynamically using conventional strategies, and also applies machine learning algorithms such as LightGBM and SVM and deep learning models such as CoAtNet and LSTM. The front-end app is an antivirus built on Tauri.

Introduction

We have created an AI antivirus system that identifies and removes malware threats from a computer system. It uses a variety of techniques and machine learning models to spot dangerous behavioral patterns in files, programs, and network traffic. Because it relies on AI, the antivirus can continuously adapt and improve its detection abilities, keeping up with an ever-changing threat landscape. By drawing on large datasets and analytics, AI antivirus software can diagnose and stop malware in real time, reducing the risk of infection and data loss. Beyond detection, it may also offer automated threat response, behavior-based analysis, and cloud-based security, providing protection for both individuals and organizations. All things considered, AI antivirus software is an important tool for keeping computer systems secure in the current digital era.

Framework

The system framework consists of the following components: the front-end user application, Azure Event Hub, Azure SQL Database, Blob Storage, Power BI, and visualization.

Methodology

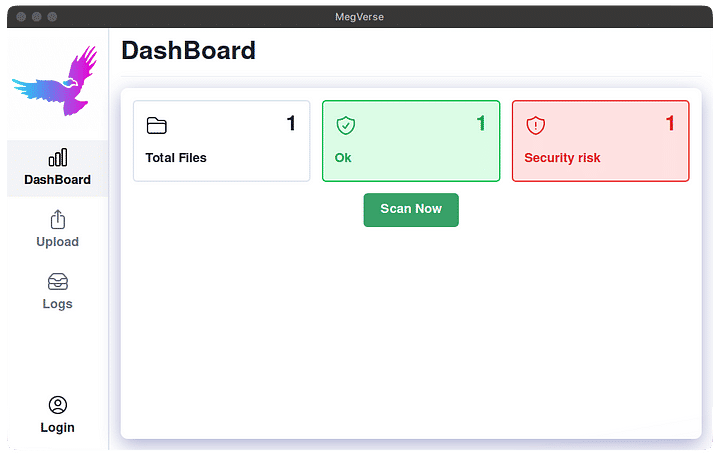

Front End App

A Linux dashboard with the option to scan files.

Front End Application

Malware data from user to Event Hub

In the first stage, user input from the front-end application must be collected. This can be done in several ways, such as form submissions or device-to-cloud telemetry. Before it is sent to the Event Hub, the captured data may need preprocessing, such as validation, normalization, or transformation, to prepare it for ingestion.
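The preprocessing step might look like the following minimal Python sketch, which assumes each scan result arrives as a dictionary. The field names (`file_path`, `sha256`, `verdict`, `score`) and the allowed verdict labels are hypothetical, not taken from the project; the function validates required fields, normalizes values, and serializes the record to JSON ready to send.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical verdict labels; adjust to whatever the scanner actually emits.
ALLOWED_VERDICTS = {"benign", "suspicious", "malicious"}
REQUIRED_FIELDS = ("file_path", "sha256", "verdict", "score")


def preprocess_scan_event(raw: dict) -> str:
    """Validate, normalize, and serialize one scan result for ingestion.

    Raises ValueError if a required field is missing or malformed.
    """
    # Validation: every required field must be present.
    for field in REQUIRED_FIELDS:
        if field not in raw:
            raise ValueError(f"missing field: {field}")

    # Normalization: lowercase hex digest and verdict label.
    sha256 = str(raw["sha256"]).strip().lower()
    if len(sha256) != 64 or any(c not in "0123456789abcdef" for c in sha256):
        raise ValueError("sha256 must be a 64-character hex digest")

    verdict = str(raw["verdict"]).strip().lower()
    if verdict not in ALLOWED_VERDICTS:
        raise ValueError(f"unknown verdict: {verdict}")

    score = float(raw["score"])
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must lie in [0, 1]")

    # Transformation: flat record with forward-slash paths and a UTC timestamp.
    event = {
        "file_path": str(raw["file_path"]).replace("\\", "/"),
        "sha256": sha256,
        "verdict": verdict,
        "score": round(score, 4),
        "scanned_at": datetime.now(timezone.utc).isoformat(),
    }
    return json.dumps(event, sort_keys=True)


if __name__ == "__main__":
    sample = {
        "file_path": "C:\\Users\\demo\\Downloads\\setup.exe",
        "sha256": hashlib.sha256(b"demo").hexdigest().upper(),
        "verdict": " Malicious ",
        "score": 0.97312,
    }
    print(preprocess_scan_event(sample))
```

Returning a JSON string keeps the payload independent of any particular SDK; the caller can wrap it in whatever event type its Event Hub client expects.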

Next, the Event Hub client is initialized in the front-end application. This involves setting up a connection to the Event Hub, configuring security credentials, and defining any relevant parameters, such as partitioning or batching. Once the client is initialized, the front-end application can start sending data to the Event Hub using the client's send method or a comparable API.
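Batching groups several events into one send call. In practice the `azure-eventhub` Python SDK handles this through `EventHubProducerClient.create_batch()` and the batch's `add()` method; the standard-library-only sketch below just illustrates the idea so it can run without an Event Hub. The 1 MB budget is an assumption (the real limit depends on the Event Hubs tier), and the sketch ignores the per-event framing overhead that the SDK accounts for.

```python
import json
from typing import Iterable, List

# Assumed per-batch byte budget; the real limit depends on the Event Hubs tier.
MAX_BATCH_BYTES = 1024 * 1024


def build_batches(events: Iterable[dict], max_bytes: int = MAX_BATCH_BYTES) -> List[List[bytes]]:
    """Group JSON-serialized events into batches whose total payload stays within max_bytes."""
    batches: List[List[bytes]] = []
    current: List[bytes] = []
    current_size = 0
    for event in events:
        payload = json.dumps(event).encode("utf-8")
        if len(payload) > max_bytes:
            raise ValueError("single event exceeds the batch size limit")
        # Close the current batch when adding this event would overflow it.
        if current and current_size + len(payload) > max_bytes:
            batches.append(current)
            current, current_size = [], 0
        current.append(payload)
        current_size += len(payload)
    # Flush the last, partially filled batch.
    if current:
        batches.append(current)
    return batches
```

Each inner list would then be sent as one batch, which reduces the number of round trips compared with sending events one at a time.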

After ingestion into the Event Hub, the data can be processed in the cloud by a variety of Azure services, such as Stream Analytics and Azure Functions, which can analyze, transform, or aggregate it in real time. The processed data can then be stored in services such as Cosmos DB or Blob Storage for later analysis or retrieval.
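As an illustration of real-time aggregation, the sketch below counts verdicts per fixed, non-overlapping (tumbling) time window, the kind of grouping Stream Analytics expresses with `TumblingWindow`. It is plain Python over an in-memory list, assuming timezone-aware UTC timestamps and hypothetical verdict labels; it is not Stream Analytics code.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone
from typing import Dict, Iterable, Tuple

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def tumbling_window_counts(
    events: Iterable[Tuple[datetime, str]], window: timedelta
) -> Dict[datetime, Counter]:
    """Count verdicts per tumbling window, keyed by the window's start time.

    Each event is a (timestamp, verdict) pair with a timezone-aware timestamp.
    """
    windows: Dict[datetime, Counter] = {}
    for ts, verdict in events:
        # Floor the timestamp to the start of the window it falls into.
        index = (ts - EPOCH) // window
        start = EPOCH + index * window
        windows.setdefault(start, Counter())[verdict] += 1
    return windows
```

Because every event lands in exactly one window, the per-window counts can be written straight to a table or dashboard without double counting.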

The processed data can then be visualized and reported using tools such as Power BI, Azure Data Explorer, or custom web applications. Charts, graphs, dashboards, and other visuals make the data easier to understand and interact with. By following these steps, organizations can collect, process, and analyze data from their front-end applications to gain insights and make data-driven decisions.

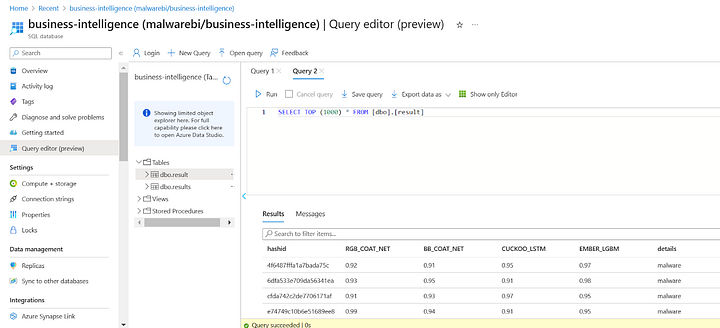

Data streaming from Event Hub to Azure SQL Database

First, use the Azure portal to create an Event Hub and an Azure SQL Database (SQL Server) instance, following the portal's step-by-step setup.

Next, create an Azure Stream Analytics job. Within the job you define the input and output streams: in this scenario the Event Hub is the input and the SQL Server instance is the output. You set up the SQL Server instance as an output sink by adding a SQL Database output in the job settings.

After defining the input and output, write a query in the Stream Analytics job that reads data from the Event Hub and writes it to the SQL Server. The query uses a SQL-like syntax and can apply the functions and operators provided by Stream Analytics to manipulate the data.
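To show what such a query does, here is a Python sketch that mirrors a `SELECT ... WHERE` over JSON messages and writes the result to a table. It uses the standard-library `sqlite3` module as a stand-in for Azure SQL Database, and the table name `ScanResults`, its columns, and the filter (dropping benign results) are hypothetical. In the actual job, this logic would be written in the Stream Analytics query language, not Python.

```python
import json
import sqlite3
from typing import Iterable


def run_query_into_sql(messages: Iterable[str], conn: sqlite3.Connection) -> int:
    """Parse JSON messages, keep non-benign verdicts, and insert them into a table.

    Mirrors the shape of: SELECT sha256, verdict, score FROM input WHERE verdict <> 'benign'.
    Returns the number of rows written.
    """
    # Hypothetical output table standing in for the Azure SQL sink.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS ScanResults ("
        "sha256 TEXT NOT NULL, verdict TEXT NOT NULL, score REAL NOT NULL)"
    )
    rows = []
    for raw in messages:
        event = json.loads(raw)
        # WHERE clause: drop benign results.
        if event["verdict"] == "benign":
            continue
        # SELECT list: project only the columns the table expects.
        rows.append((event["sha256"], event["verdict"], float(event["score"])))
    # Parameterized bulk insert; the connection context manager commits on success.
    with conn:
        conn.executemany(
            "INSERT INTO ScanResults (sha256, verdict, score) VALUES (?, ?, ?)", rows
        )
    return len(rows)
```

Using parameterized inserts rather than string formatting keeps untrusted file metadata from being interpreted as SQL.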

You can start the Stream Analytics job after the query has been configured.

Based on the query you specified, the job will continuously read data from the Event Hub and publish it to the SQL Server.

It is important to monitor the Stream Analytics job to make sure it is running properly. The Azure portal lets you view job metrics and logs and set up alerts that notify you of any job-related problems. Together, these steps create a pipeline that sends data from an Event Hub to a SQL Server instance for processing and analysis.

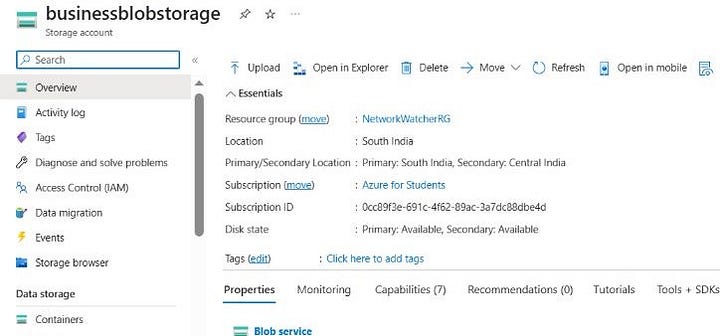

Data Streaming from Event Hub to Blob Storage

First, create a storage account in the Azure portal; it will hold the data sent from the Event Hub. Once the account has been created, create a Blob Storage container inside it. The data stored in Blob Storage will be organized in this container.
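Container names have to follow Azure's naming rules: 3 to 63 characters, only lowercase letters, digits, and hyphens, starting and ending with a letter or digit, with no consecutive hyphens. A small pre-check like the hypothetical helper below can catch an invalid name before the portal or SDK rejects it.

```python
import re

# Blob container naming rules: 3-63 characters; lowercase letters, digits and
# hyphens only; must start and end with a letter or digit; no "--" anywhere.
_CONTAINER_NAME = re.compile(r"[a-z0-9](?!.*--)[a-z0-9-]{1,61}[a-z0-9]")


def is_valid_container_name(name: str) -> bool:
    """Return True if name satisfies the Blob Storage container naming rules."""
    return _CONTAINER_NAME.fullmatch(name) is not None
```

For example, `malware-scans` passes, while `Malware`, `ab`, and `scan--logs` are rejected.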

Next, set up Event Hubs Capture to transfer data to Blob Storage. To do this, open the Event Hubs namespace, select the Event Hub whose data you want to capture, and turn on Capture to Blob Storage. You can then specify the storage account and container that should receive the data.

When choosing Blob Storage as the destination for the captured data, supply the storage account name and container name, together with credentials that grant write access to the container, such as a shared access signature (SAS) token with the necessary write permissions.

Once Capture is configured with Blob Storage as the destination, the Event Hub begins collecting incoming data and writing it to the chosen container as Avro files.
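To locate the captured files, it helps to know how Capture names them. The sketch below assumes the default archive name format `{Namespace}/{EventHub}/{PartitionId}/{Year}/{Month}/{Day}/{Hour}/{Minute}/{Second}` with an `.avro` extension; if a custom format was configured, the path will differ. The function name is hypothetical, and reading the Avro contents themselves needs a library such as `fastavro` or `avro`, which is not shown.

```python
from datetime import datetime, timezone


def capture_blob_name(namespace: str, event_hub: str, partition_id: str, window_start: datetime) -> str:
    """Build the blob name for one capture file, assuming the default naming format."""
    if window_start.tzinfo is None:
        raise ValueError("window_start must be timezone-aware")
    # Capture paths use UTC, zero-padded date and time components.
    ts = window_start.astimezone(timezone.utc)
    return (
        f"{namespace}/{event_hub}/{partition_id}/"
        f"{ts:%Y}/{ts:%m}/{ts:%d}/{ts:%H}/{ts:%M}/{ts:%S}.avro"
    )
```

A downstream job can use such a prefix (for example, everything under one day's folder) to list only the files it needs instead of scanning the whole container.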

Finally, tools such as Azure Stream Analytics or Azure Databricks can read the Avro files from Blob Storage and analyze the stored data. Following these steps stores Event Hub data in Blob Storage, where it remains available for later processing and analysis.

Data Streaming from Azure SQL Database to Power BI and Visualization

To connect Power BI to the Azure database, first choose the Azure SQL Database connector from the “Get Data” menu in Power BI. Then enter the server name, database name, and login credentials for your Azure database to establish the connection.

Once connected, choose the tables and columns you want to bring into Power BI. Depending on your requirements, you can use “Import” mode, which copies the data into the Power BI model, or “DirectQuery”, which sends queries to the Azure database as the report is used.

After importing the data, you can build reports and visualizations in Power BI to understand and analyze it, using charts, graphs, tables, and other visuals to present the data clearly.

Once your reports and visualizations are complete in Power BI Desktop, you can publish them to the Power BI service, where you can access them from anywhere and share them with other people by granting them access.

You can also integrate your results into other applications or websites by using Power BI Embedded.

Visualizing the efficiency of each model: Pie chart (left) and Bar graph (right)

Conclusion

In conclusion, AI-powered antivirus solutions have several benefits over conventional antivirus software. By using machine learning algorithms to analyze large amounts of data, these systems can quickly identify and respond to new and emerging threats, including previously unknown malware and zero-day attacks. Additionally, since they can automate many of the steps involved

Acknowledgements

Special thanks to Daniel Anantha Geethan, Tarun Kumar P, Arjun V K and Arjun P for their contributions to this project and article.